Unlocking Database Performance: A Comprehensive Guide to Index Optimization

Sep 2, 2023

Introduction

In the realm of databases, SQL queries often deceive with their simple syntax, masking the complexities of execution beneath. The true performance of a query, such as a basic SELECT statement, isn't apparent through its structure alone but hinges on the execution plan devised by the database engine. This plan, influenced by factors like indexes and data volume, dictates the efficiency of data retrieval, making understanding these underlying mechanics crucial for optimizing database interactions.

The Deceptive Nature of SQL Queries

At first glance, the SQL query SELECT salary FROM emp WHERE id = 97 seems straightforward, suggesting a simple retrieval of data. However, the performance of this query can vary widely based on the database's configuration. Without an index on the id field, the database engine must perform a full table scan, sifting through every record, which can be time-consuming. Introducing an index on id turns this into a swift index scan, significantly reducing the time required. Further optimization, such as including salary in the index, can lead to an index-only scan, which can avoid reading the table at all. Yet adding millions of rows without proper maintenance can degrade performance, illustrating how changes to the database can dramatically affect query efficiency.
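The progression described above can be sketched in SQL. This is a minimal illustration, assuming a hypothetical emp table with id and salary columns; the index names are made up, and the plan PostgreSQL actually picks depends on table size and current statistics.

```sql
-- Hypothetical table matching the query in the text.
CREATE TABLE emp (
    id     integer NOT NULL,
    name   text,
    salary numeric(10, 2)
);

-- 1. No index on id: a sequential (full table) scan is the only option.
EXPLAIN SELECT salary FROM emp WHERE id = 97;

-- 2. With a B-Tree index on id, an index scan becomes possible.
CREATE INDEX emp_id_idx ON emp (id);
EXPLAIN SELECT salary FROM emp WHERE id = 97;

-- 3. Carrying salary in the index makes an index-only scan possible
--    (the INCLUDE clause requires PostgreSQL 11 or later).
CREATE INDEX emp_id_salary_idx ON emp (id) INCLUDE (salary);
VACUUM ANALYZE emp;  -- refreshes the visibility map and planner statistics
EXPLAIN SELECT salary FROM emp WHERE id = 97;
```

On a very small table the planner may still prefer a sequential scan, so the difference only shows up once the table holds a realistic number of rows.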

Understanding Indexes in PostgreSQL & Real-World Applications of Index Types

Choosing the right type of index in PostgreSQL can have a significant impact on the performance of your database operations. Here are real-life examples for each type of index, along with guidance on when to apply them:

1. B-Tree Indexes

B-Tree indexes are multi-level tree structures, where each level of the tree can be used as a doubly-linked list of pages.

Example Use Case: An online retail store's database where you need to query orders by customer ID, date range, or order amount. A B-Tree index on the customer_id, order_date, or total_amount columns can speed up these queries.

When to Use:

- When you need to support fast lookups, range queries, ordering, and sorting.

- Ideal for columns with high cardinality (unique or nearly unique values).

- When your queries use operators like =, <, <=, >, >=, or BETWEEN.

- Supports ordering, making them suitable for ORDER BY and range queries.
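As a rough sketch of the retail use case, assuming a hypothetical orders table (the table, column, and index names here are illustrative only):

```sql
-- Hypothetical orders table for the retail example.
CREATE TABLE orders (
    order_id     bigint PRIMARY KEY,
    customer_id  bigint NOT NULL,
    order_date   date NOT NULL,
    total_amount numeric(12, 2) NOT NULL
);

-- B-Tree is the default index type, so USING btree is optional.
CREATE INDEX orders_customer_id_idx ON orders USING btree (customer_id);
CREATE INDEX orders_order_date_idx  ON orders (order_date);

-- Equality lookup that can use orders_customer_id_idx.
SELECT * FROM orders WHERE customer_id = 1042;

-- Range query with ordering that can use orders_order_date_idx.
SELECT *
FROM orders
WHERE order_date BETWEEN DATE '2023-01-01' AND DATE '2023-03-31'
ORDER BY order_date;
```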

2. Hash Indexes

Hash indexes store a 32-bit hash code derived from the value of the indexed column. Hence, such indexes can only handle simple equality comparisons.

Example Use Case: A session management system where sessions are looked up frequently by a unique session ID. A hash index on the session_id column can make these lookups more efficient.

When to Use:

- Primarily for equality comparisons (=) where the column values are unique.

- When the table is not involved in range queries or ordering operations on the indexed column.

- More space-efficient than B-Tree indexes for specific use cases involving only equality checks.
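A minimal sketch of the session lookup case, assuming a hypothetical sessions table; the names and the sample session ID are invented for illustration:

```sql
-- Hypothetical session store.
CREATE TABLE sessions (
    session_id text NOT NULL,
    user_id    bigint NOT NULL,
    expires_at timestamptz NOT NULL
);

-- Hash index: usable only for equality comparisons on session_id.
CREATE INDEX sessions_session_id_hash_idx
    ON sessions USING hash (session_id);

-- Equality lookup that can use the hash index.
SELECT user_id, expires_at
FROM sessions
WHERE session_id = 'a3f1c9e27b';
```

Note that a hash index cannot enforce uniqueness, so a UNIQUE or PRIMARY KEY constraint on session_id would still be backed by a B-Tree index.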

3. GiST Indexes

GiST stands for Generalized Search Tree. It is a balanced, tree-structured access method that acts as a base template in which to implement arbitrary indexing schemes.

Example Use Case: A real estate application that allows users to search for properties within a certain geographical area. Using GiST indexes on spatial data enables efficient searches for properties within specified regions.

When to Use:

- For complex data types like geometric information, text, trees, and more.

- When you need to support "fuzzy" searches, spatial queries, or full-text search.

- Ideal for implementing custom search operations.

- Supports a wide range of data types and search strategies.

- Ideal for spatial data (using the PostGIS extension) and full-text search.

- Can be used for indexing and searching based on "overlaps" or containment relationships.
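The real estate example could be sketched as follows. To stay free of extensions, this sketch uses PostgreSQL's built-in point and box types rather than PostGIS geometry, and the properties table and coordinates are hypothetical:

```sql
-- Hypothetical listing table; location is a plain x/y point (longitude, latitude).
CREATE TABLE properties (
    property_id bigint PRIMARY KEY,
    address     text,
    location    point NOT NULL
);

CREATE INDEX properties_location_gist_idx
    ON properties USING gist (location);

-- Properties whose location falls inside a rectangular search area.
SELECT property_id, address
FROM properties
WHERE location <@ box '((-122.52, 37.70), (-122.35, 37.83))';

-- Five properties nearest to a reference point (GiST supports ordering by distance).
SELECT property_id, address
FROM properties
ORDER BY location <-> point '(-122.42, 37.77)'
LIMIT 5;
```

With PostGIS installed, the same pattern applies to geometry or geography columns, using that extension's own operators and functions.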

4. GIN Indexes

GIN stands for Generalized Inverted Index. GIN is designed for handling cases where the items to be indexed are composite values, and the queries to be handled by the index need to search for element values that appear within the composite items.

Example Use Case: A document management system where documents are tagged with multiple keywords, and users need to search documents based on these tags. A GIN index on the tags array column can make these searches fast and efficient.

When to Use:

- When you need to index composite types like arrays, JSONB, or tsvector (for full-text search).

- Efficient for querying composite types (e.g., arrays, JSONB) and full-text search.

- Supports indexing elements within composite types, making searches within these elements fast.
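For the document tagging example, a sketch might look like this, assuming a hypothetical documents table with a text[] tags column:

```sql
-- Hypothetical document store with an array of tags per document.
CREATE TABLE documents (
    document_id bigint PRIMARY KEY,
    title       text NOT NULL,
    tags        text[] NOT NULL DEFAULT '{}'
);

CREATE INDEX documents_tags_gin_idx ON documents USING gin (tags);

-- Documents tagged with BOTH 'contract' and 'signed' (array containment).
SELECT document_id, title
FROM documents
WHERE tags @> ARRAY['contract', 'signed'];

-- Documents tagged with ANY of the listed tags (array overlap).
SELECT document_id, title
FROM documents
WHERE tags && ARRAY['invoice', 'receipt'];
```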

5. BRIN Indexes

BRIN, or Block Range Index, is an index type in PostgreSQL designed to provide efficient indexing for large tables with sorted data. A BRIN index stores the minimum and maximum values for each group of consecutive table pages. Because it is so small and cheap to maintain, a BRIN index is one of the lowest-effort ways to speed up queries on such tables.

Example Use Case: A climate monitoring system that stores years of temperature data. Data is inserted in chronological order. A BRIN index on the date column can efficiently summarize and access this large dataset.

When to Use:

- For very large tables where data is physically stored in sorted order according to the indexed column.

- When the table size is significantly larger than memory, and data access patterns benefit from summarization.

- Ideal for time-series data, log data, or any naturally ordered dataset.

- Highly space-efficient for large tables with natural ordering.

- Reduces I/O by allowing the database to skip over blocks of data that don't contain relevant values.
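A sketch of the climate monitoring case, assuming a hypothetical temperature_readings table that is loaded in chronological order; the pages_per_range value is only an illustrative setting (the default is 128):

```sql
-- Hypothetical append-only measurements table.
CREATE TABLE temperature_readings (
    station_id    integer NOT NULL,
    recorded_at   timestamptz NOT NULL,
    temperature_c numeric(5, 2) NOT NULL
);

-- One min/max summary per 64 table pages.
CREATE INDEX temperature_readings_recorded_at_brin_idx
    ON temperature_readings USING brin (recorded_at)
    WITH (pages_per_range = 64);

-- Page ranges whose min/max cannot overlap July 2022 can be skipped.
SELECT avg(temperature_c)
FROM temperature_readings
WHERE recorded_at >= TIMESTAMPTZ '2022-07-01'
  AND recorded_at <  TIMESTAMPTZ '2022-08-01';
```

The benefit depends on physical ordering: if rows are inserted or updated out of order, the per-range summaries widen and fewer pages can be skipped.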

6. SP-GiST Indexes

SP-GiST is an abbreviation for space-partitioned GiST. SP-GiST supports partitioned search trees, which facilitate development of a wide range of different non-balanced data structures, such as quad-trees, k-d trees, and radix trees (tries).

Example Use Case: A telecommunications company that needs to manage and query a large dataset of phone numbers. SP-GiST can efficiently partition this space, making searches faster.

When to Use:

- For non-uniformly distributed data that can be divided into non-overlapping regions.

- When you need to implement custom partitioning schemes, like quad-trees or radix trees, for specialized search operations.

- Supports partitioning of search space, which is useful for non-balanced data distributions.

- Efficient for data that can be partitioned into non-overlapping regions, such as quad-trees or radix trees.
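One way to sketch the phone number example is with the built-in SP-GiST support for text, which is implemented as a radix tree. The subscribers table and the sample numbers are hypothetical, and the ^@ (starts-with) operator assumes PostgreSQL 11 or later:

```sql
-- Hypothetical subscriber directory.
CREATE TABLE subscribers (
    subscriber_id bigint PRIMARY KEY,
    phone_number  text NOT NULL
);

CREATE INDEX subscribers_phone_spgist_idx
    ON subscribers USING spgist (phone_number);

-- Exact match.
SELECT subscriber_id
FROM subscribers
WHERE phone_number = '+14155550123';

-- Prefix search: all numbers starting with a given country and area code.
SELECT subscriber_id, phone_number
FROM subscribers
WHERE phone_number ^@ '+1415';
```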

7. Covering Indexes

Covering indexes specify a list of columns to be included in the index as non-key columns (the INCLUDE clause in PostgreSQL). Used correctly, indexes with included columns improve performance and reduce overall query cost, because matching queries can be answered from the index alone.

Example Use Case: A customer support system where queries frequently access a customer's name, email, and last contact date. A covering index including these columns can satisfy queries directly from the index without table lookups.

When to Use:

- When a query frequently accesses a small number of columns from a large table.

- To avoid table lookups by including all columns required by the query in the index.

- When the performance benefit of reading all necessary data directly from the index outweighs the cost of increased index size and maintenance.

- Reduces disk I/O by avoiding table lookups when the index contains all the fields required by a query.

- Can significantly speed up queries if the selected columns are included in the index.
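For the customer support example, a covering index could be sketched like this. The customers table is hypothetical, the choice of email as the lookup key is an assumption, and INCLUDE requires PostgreSQL 11 or later:

```sql
-- Hypothetical customer table.
CREATE TABLE customers (
    customer_id       bigint PRIMARY KEY,
    name              text NOT NULL,
    email             text NOT NULL,
    last_contact_date date,
    notes             text
);

-- email is the search key; name and last_contact_date ride along as non-key columns.
CREATE INDEX customers_email_covering_idx
    ON customers (email) INCLUDE (name, last_contact_date);

-- Every selected column is in the index, so an index-only scan is possible.
SELECT name, email, last_contact_date
FROM customers
WHERE email = 'jane@example.com';
```

Whether PostgreSQL actually performs an index-only scan also depends on the table's visibility map being reasonably current, which regular vacuuming takes care of.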

Deciding on an Index

- Analyze Query Patterns: Look at the most frequent and resource-intensive queries to understand what columns are being queried and how (e.g., range queries, exact matches, joins).

- Consider the Data: The nature of the data (e.g., its type, distribution, and how it's modified) plays a significant role in deciding the index type.

- Understand the Trade-offs: Indexes speed up read operations but can slow down writes due to the additional overhead of maintaining the index. Consider the read-write balance of your application.

- Use EXPLAIN: PostgreSQL's EXPLAIN command can help you understand how queries are executed and where indexes might be beneficial.

- Monitor and Adjust: Performance tuning is an iterative process. Monitor the impact of indexes on your application performance and adjust as necessary.

Selecting the right index involves understanding your application's specific requirements and testing to see what works best in your environment.

Best Practices

- Index Maintenance: Indexes can become fragmented over time, leading to degraded performance. Regular maintenance (e.g., REINDEX) can help maintain performance.

- Index Overhead: While indexes can speed up query performance, they also add overhead to data modification operations (INSERT, UPDATE, DELETE) and consume additional disk space. It's essential to balance the need for quick reads against the cost of slower writes and increased storage.

- Monitoring and Analysis: Use tools like EXPLAIN and EXPLAIN ANALYZE to understand how indexes are being used and to identify potential performance bottlenecks.

Strategies for Debugging and Optimizing PostgreSQL Indexes

Let's delve into each of the strategies for debugging and optimizing indexes in PostgreSQL with practical examples:

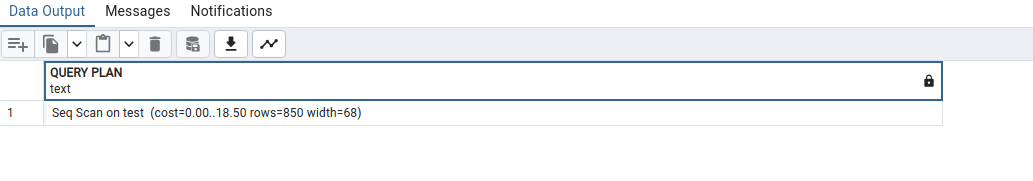

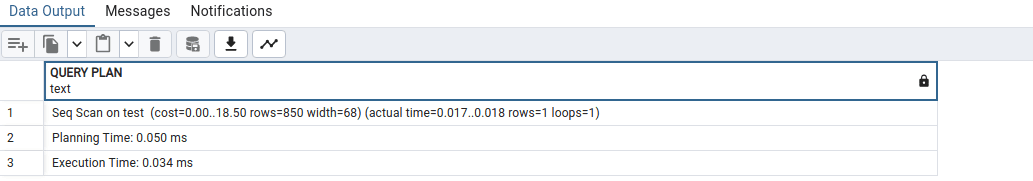

1. Use EXPLAIN and EXPLAIN ANALYZE

To understand how a query uses indexes, you can prepend EXPLAIN or EXPLAIN ANALYZE to your SQL query:
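As a sketch, using the emp query discussed earlier (any table and predicate would do):

```sql
-- Shows the estimated plan only; the query is not executed.
EXPLAIN
SELECT salary FROM emp WHERE id = 97;

-- Executes the query and reports actual timings and row counts per plan node.
EXPLAIN ANALYZE
SELECT salary FROM emp WHERE id = 97;
```

Because EXPLAIN ANALYZE really runs the statement, data-modifying statements are best wrapped in BEGIN and ROLLBACK when you only want the plan.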

These commands show the execution plan and, for EXPLAIN ANALYZE, the actual execution time and rows processed at each step. Below is the output for the above statements, in order.

2. Index Usage Statistics

To check if indexes are being used, look at the pg_stat_user_indexes view:
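One possible form of such a query is sketched here; the column aliases are illustrative:

```sql
-- Indexes on user tables with zero recorded scans.
SELECT schemaname,
       relname      AS table_name,
       indexrelname AS index_name,
       idx_scan
FROM pg_stat_user_indexes
WHERE idx_scan = 0
ORDER BY schemaname, relname, indexrelname;
```

The counters are cumulative since the statistics were last reset, so a zero is only meaningful after the workload has run for a representative period.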

This query lists indexes that have never been used for scanning.

3. Monitor Long Running Queries

To find long-running queries, you can query the pg_stat_activity view:
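A sketch of such a query, with the five-minute threshold as an adjustable assumption:

```sql
-- Active statements that started more than five minutes ago.
SELECT pid,
       usename,
       state,
       now() - query_start AS runtime,
       query
FROM pg_stat_activity
WHERE state = 'active'
  AND now() - query_start > interval '5 minutes'
ORDER BY runtime DESC;
```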

This shows queries that have been running for more than 5 minutes.

4. Check for Unused or Redundant Indexes

To find unused indexes:
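A sketch that joins the statistics view to the pg_index catalog so that unique indexes, which enforce constraints even when never scanned, are left out:

```sql
-- Non-unique indexes with zero recorded scans, largest first.
SELECT s.schemaname,
       s.relname      AS table_name,
       s.indexrelname AS index_name,
       pg_size_pretty(pg_relation_size(s.indexrelid)) AS index_size
FROM pg_stat_user_indexes AS s
JOIN pg_index AS i ON i.indexrelid = s.indexrelid
WHERE s.idx_scan = 0
  AND NOT i.indisunique
ORDER BY pg_relation_size(s.indexrelid) DESC;
```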

This query identifies non-unique indexes that have never been used for scans.

5. Consider the Impact of VACUUM and ANALYZE

Regularly run VACUUM and ANALYZE to maintain performance:
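For example, on the emp table from the earlier query (used here purely as a stand-in for one of your own tables):

```sql
-- Reclaim space held by dead tuples and report what was done.
VACUUM (VERBOSE) emp;

-- Refresh the planner statistics for the table.
ANALYZE VERBOSE emp;

-- Or do both in one pass.
VACUUM (VERBOSE, ANALYZE) emp;
```

On most installations autovacuum performs both tasks in the background; manual runs are mainly useful after bulk loads or large deletes.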

VACUUM cleans up dead tuples, and ANALYZE updates statistics used by the query planner. Below are the responses, respectively.

6. Index and Table Bloat

To check for bloat in tables and indexes, you can use the pgstattuple extension:
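A sketch, assuming the extension is available on your server and using emp and emp_id_idx as hypothetical table and B-Tree index names:

```sql
-- Install the extension once per database (needs sufficient privileges).
CREATE EXTENSION IF NOT EXISTS pgstattuple;

-- Table-level statistics, including dead_tuple_percent and free_percent.
SELECT * FROM pgstattuple('emp');

-- B-Tree index statistics, including avg_leaf_density and leaf_fragmentation.
SELECT * FROM pgstatindex('emp_id_idx');
```

Both functions read the whole relation, so they can be slow on very large tables.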

This provides detailed statistics about table and index bloat.

7. Use the pg_stat_statements Extension

First, ensure the pg_stat_statements module is enabled in your PostgreSQL configuration. Then, query its view to find inefficient queries:
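A sketch of the setup and query. The column names shown are those of PostgreSQL 13 and later; older releases expose total_time and mean_time instead:

```sql
-- Prerequisite (postgresql.conf, requires a server restart):
--   shared_preload_libraries = 'pg_stat_statements'

CREATE EXTENSION IF NOT EXISTS pg_stat_statements;

-- Ten statements with the highest cumulative execution time.
SELECT query,
       calls,
       total_exec_time,
       mean_exec_time,
       rows
FROM pg_stat_statements
ORDER BY total_exec_time DESC
LIMIT 10;
```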

This lists the top 10 time-consuming queries.

8. Review Index Types

Choose the right index type based on your data and query patterns. For example, to create a GIN index for array data:
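A minimal sketch, assuming a hypothetical articles table that has a tags column of type text[]:

```sql
-- GIN index over the elements of the tags array.
CREATE INDEX articles_tags_gin_idx ON articles USING gin (tags);

-- Containment search that can use the index.
SELECT * FROM articles WHERE tags @> ARRAY['postgresql'];
```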

9. Check for Index Scans vs. Bitmap Heap Scans

Use EXPLAIN to see whether a query uses an index scan or a bitmap heap scan:
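As a sketch, assuming a hypothetical orders table with an index on customer_id; which plan appears depends on the data and statistics:

```sql
-- A highly selective predicate often yields a plain index scan.
EXPLAIN
SELECT * FROM orders WHERE customer_id = 1042;

-- A predicate matching more rows may yield a bitmap scan instead.
EXPLAIN
SELECT * FROM orders WHERE customer_id IN (1042, 1043, 1044);

-- Node names to look for in the output:
--   Index Scan using <index> on orders
--   Bitmap Heap Scan on orders  (with a child Bitmap Index Scan on <index>)
--   Seq Scan on orders          (no index used)
```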

The plan will show whether an index or bitmap heap scan is used.

10. Evaluate Partial Indexes

Create a partial index for queries that always include a specific condition:
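A sketch, assuming a hypothetical orders table with status and order_date columns, where most queries look only at pending orders:

```sql
-- Only rows with status = 'pending' are stored in the index.
CREATE INDEX orders_pending_order_date_idx
    ON orders (order_date)
    WHERE status = 'pending';

-- The query must repeat the index condition for the planner to use the index.
SELECT *
FROM orders
WHERE status = 'pending'
  AND order_date < DATE '2023-09-01';
```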

This indexes only the rows that meet the specified condition.

11. Function-based Indexes

If queries often perform operations on columns, consider creating an index on the function or expression:
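A sketch, assuming a hypothetical users table with an email column:

```sql
-- Index the lower-cased value rather than the raw column.
CREATE INDEX users_lower_email_idx ON users (lower(email));

-- The query must use the same expression to match the index.
SELECT *
FROM users
WHERE lower(email) = lower('Jane.Doe@Example.com');
```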

This is useful for case-insensitive searches on the column.

By applying these examples to your specific use cases, you can effectively debug and optimize the use of indexes in your PostgreSQL database, leading to significant performance improvements.

Managing Index Overhead: Strategies and Examples

Indexing is an essential aspect of database optimization, enhancing query performance and data retrieval speeds. However, the benefits of indexing come with their own set of trade-offs, primarily the additional overhead associated with maintaining these indexes. This overhead manifests in several ways, including increased space requirements and a potential impact on data insertion, updates, and deletion operations. Understanding how to manage this overhead is crucial for maintaining a balanced and efficient database system.

Understanding Index Overhead

Before diving into management strategies, it's important to understand the nature of index overhead. Indexes, while invaluable for speeding up read operations, essentially duplicate data and require additional disk space. This duplication means that every time a data manipulation operation occurs (such as an insert, update, or delete), the database must also update the indexes associated with the affected table, leading to increased I/O operations and potentially longer processing times.

Space Requirements

Every index you create adds to the storage footprint of your database. This is not just about the space that the index itself occupies; it also affects the database cache. More indexes mean that a larger portion of the cache is dedicated to indexing information, potentially reducing the efficiency of data caching and increasing the likelihood of cache misses.

Impact on Data Modification Operations

Inserts, updates, and deletes are directly impacted by the presence of indexes. Each operation requires corresponding updates to all associated indexes, which can significantly increase the total execution time for these operations:

- Inserts: When a new record is inserted, every index on the table must be updated. This includes adding a new entry to each index, which can be particularly costly for tables with many indexes or complex composite indexes.

- Updates: Updating a field that is part of one or more indexes requires those indexes to be modified. This can involve both removing the old entry and adding a new one, essentially doubling the work.

- Deletes: Deleting a record necessitates the removal of corresponding entries from each index, adding overhead to what would otherwise be a straightforward operation.

Strategies for Managing Index Overhead

Managing index overhead involves a delicate balance between optimizing query performance and minimizing the negative impacts of indexes on space and data modification operations. Here are several strategies, accompanied by examples, to effectively manage index overhead:

1. Regular Index Maintenance

Regular maintenance tasks such as reindexing and defragmenting can help optimize index storage and performance. PostgreSQL's REINDEX command, for example, rebuilds an index to eliminate bloat and ensure data is stored compactly:
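For example, with emp and emp_id_idx as hypothetical table and index names:

```sql
-- Rebuild a single index (blocks writes to the table while it runs).
REINDEX INDEX emp_id_idx;

-- Rebuild every index on a table.
REINDEX TABLE emp;

-- PostgreSQL 12 and later: rebuild without blocking concurrent writes.
REINDEX INDEX CONCURRENTLY emp_id_idx;
```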

2. Use Partial Indexes

Partial indexes index only a subset of a table's data, based on a specified condition. This approach can significantly reduce index size and maintenance overhead for tables where queries frequently target specific segments:
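A sketch, assuming a hypothetical users table with an email column and a boolean active column:

```sql
-- Index only the rows where active is true.
CREATE INDEX users_active_email_idx
    ON users (email)
    WHERE active;

-- Queries that filter on active can use the smaller index.
SELECT *
FROM users
WHERE active
  AND email = 'jane@example.com';
```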

This index would only include active users, reducing the size and update overhead compared to a full-table index.

3. Choose Appropriate Index Types

Different types of indexes have different space and performance characteristics. For example, a BRIN (Block Range INdex) is particularly space-efficient for large tables with naturally ordered data, whereas a GIN (Generalized Inverted Index) index is better suited for indexing array or JSONB columns:
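A sketch, assuming a hypothetical append-only events table whose column is literally named timestamp (quoted here to keep it distinct from the type name):

```sql
-- Block-range summaries over a naturally ordered timestamp column.
CREATE INDEX events_timestamp_brin_idx
    ON events USING brin ("timestamp");
```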

This BRIN index would be efficient for a table with a large number of rows and a column (timestamp) that is naturally ordered.

4. Index De-duplication

Analyze your indexes to identify and remove duplicates or near-duplicates, which add unnecessary overhead. Tools like pgAdmin or scripts can help identify redundant indexes that can be safely dropped.
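One way such a script might look is sketched below. It treats two indexes as duplicates when they share the same table, key columns, operator classes, expressions, and predicate, which is an assumption about what "duplicate" should mean for your schema:

```sql
-- Groups of indexes with identical definitions on the same table.
SELECT indrelid::regclass              AS table_name,
       array_agg(indexrelid::regclass) AS matching_indexes,
       pg_size_pretty(sum(pg_relation_size(indexrelid))) AS total_size
FROM pg_index
GROUP BY indrelid,
         indkey::text,
         indclass::text,
         coalesce(indexprs::text, ''),
         coalesce(indpred::text, '')
HAVING count(*) > 1;
```

A match between a unique and a non-unique index is also reported; in that case the unique index is normally the one to keep.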

5. Consider Index-Organized Tables

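For tables where read performance is critical and writes are less frequent, consider index-organized tables (also known as clustered indexes in some RDBMSs). This approach stores table data directly within the index, eliminating separate index lookups during queries, but it can increase overhead for write operations. PostgreSQL itself does not offer index-organized tables; its closest tool is the CLUSTER command, which physically reorders a table by an index once and does not maintain that order on later writes.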

6. Monitor and Analyze Index Usage

Regularly monitor index usage to identify unused or rarely used indexes. PostgreSQL provides the pg_stat_user_indexes view to track index usage statistics:
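A sketch, with the threshold of 50 scans as an arbitrary cut-off to adjust for your workload:

```sql
-- Indexes with fewer than 50 recorded scans, least used first.
SELECT schemaname,
       relname      AS table_name,
       indexrelname AS index_name,
       idx_scan
FROM pg_stat_user_indexes
WHERE idx_scan < 50
ORDER BY idx_scan, schemaname, relname;
```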

This query identifies indexes that have been scanned less than 50 times, potentially indicating candidates for removal.

7. Optimize Index Columns

Limit the number of columns in a composite index and order them based on their selectivity and usage in query conditions. The most selective columns, or those most frequently used in WHERE clauses, should come first.

8. Limit Indexes on Highly Volatile Tables

For tables subject to frequent inserts, updates, and deletes, minimize the number of indexes. Evaluate each index's impact on write performance.

Practical Tips and Best Practices for Database Indexing

Effective database indexing is a cornerstone of high-performance applications. It not only accelerates data retrieval but also ensures efficient data manipulation. However, improper indexing can lead to increased storage requirements, slower write operations, and suboptimal query performance. This comprehensive guide provides practical tips and best practices for creating, combining, and dropping indexes, along with leveraging tools like EXPLAIN ANALYZE to fine-tune database performance. We'll illustrate these concepts with examples and link to official documentation for deeper insights.

When to Create Indexes

1. High-Read Tables

Create indexes on tables that are frequently queried. This improves read performance by reducing the need for full table scans. For instance, if you often query a users table by username, an index on the username column would be beneficial:
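A sketch, assuming a hypothetical users table with a username column:

```sql
-- B-Tree index (the default type) on the lookup column.
CREATE INDEX users_username_idx ON users (username);

-- Lookup that can now avoid a full table scan.
SELECT * FROM users WHERE username = 'jdoe';
```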

PostgreSQL Documentation on CREATE INDEX

2. Columns Used in JOIN Conditions

Indexes on columns that are frequently used in JOIN conditions can significantly improve the performance of JOIN operations. For example, if you regularly join orders and customers tables on the customer_id column, ensure both tables have indexes on these columns.

3. Columns Used in WHERE Clauses

Columns that frequently appear in WHERE clauses are prime candidates for indexing. This enables the database to quickly locate rows that match the query criteria without scanning the entire table.

4. Columns Used for Sorting

Columns that are often used in ORDER BY clauses should be indexed. This can dramatically reduce sorting overhead for query results, as the database can use the index to efficiently order the rows.

When to Combine Indexes

1. Composite Indexes for Multi-Column Queries

When queries frequently filter or sort on multiple columns together, consider creating a composite index. The order of columns in the index should reflect their order and frequency of use in queries:
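A sketch, assuming a hypothetical addresses table with city and state columns:

```sql
-- Composite index; city is the leading column.
CREATE INDEX addresses_city_state_idx ON addresses (city, state);

-- Filters on both columns can use the whole index.
SELECT *
FROM addresses
WHERE city = 'Springfield'
  AND state = 'IL';
```

A query that filters on state alone benefits far less from this index, because state is not the leading column.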

This index would be effective for queries filtering by city and state together.

Composite Indexes in PostgreSQL

2. Consider Index Scans vs. Bitmap Heap Scans

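Use EXPLAIN to determine whether queries are using index scans or bitmap heap scans. A composite index that covers all the filtered columns lets the planner use a single index scan instead of combining several single-column indexes through bitmap operations. Plain index scans tend to win for highly selective predicates, while bitmap heap scans, which first build an in-memory bitmap of matching pages, tend to win when many rows match.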

When to Drop Indexes

1. Unused Indexes

Regularly review index usage and consider dropping indexes that are not used. You can identify unused indexes by querying the pg_stat_user_indexes view in PostgreSQL:
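A sketch that also reports how much space each candidate occupies. The index name in the DROP statement is hypothetical and stands for whichever index you have confirmed is safe to remove:

```sql
-- Never-scanned indexes with their on-disk size, largest first.
SELECT schemaname,
       relname      AS table_name,
       indexrelname AS index_name,
       idx_scan,
       pg_size_pretty(pg_relation_size(indexrelid)) AS index_size
FROM pg_stat_user_indexes
WHERE idx_scan = 0
ORDER BY pg_relation_size(indexrelid) DESC;

-- Drop a confirmed-unused index without blocking writes to its table.
DROP INDEX CONCURRENTLY IF EXISTS users_legacy_idx;
```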

Dropping unused indexes can reduce storage and maintenance overhead.

Monitoring Index Usage in PostgreSQL

2. Redundant Indexes

If multiple indexes exist on the same column(s), evaluate their necessity. Redundant indexes increase the maintenance cost without providing additional benefits.

Optimizing Database Performance with EXPLAIN ANALYZE

1. Understanding Query Plans

Use EXPLAIN ANALYZE to understand how PostgreSQL executes your queries. This tool provides detailed insights into the query execution plan, including whether indexes are used, the types of scans performed, and the cost associated with each operation:
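A sketch, assuming a hypothetical orders table with customer_id, order_date, and total_amount columns; the BUFFERS option is an optional addition that reports how many pages were read:

```sql
-- Runs the query and shows actual times, row counts, and buffer usage per node.
EXPLAIN (ANALYZE, BUFFERS)
SELECT customer_id, sum(total_amount) AS total_spent
FROM orders
WHERE order_date >= DATE '2023-01-01'
GROUP BY customer_id;
```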

This output helps identify performance bottlenecks and opportunities for optimization.

2. Analyzing Join Performance

For queries involving joins, EXPLAIN ANALYZE can show you how tables are joined and whether indexes are effectively used. This information can guide index creation on foreign key columns or frequently joined fields.

3. Evaluating Sort Operations

EXPLAIN ANALYZE results indicate if a query's sort operation is performed in memory or requires disk writes (a condition known as "sort spill"). Indexing the sorted columns can often eliminate the need for sort spill, improving query performance.

4. Tuning Sequential Scans

Sometimes, EXPLAIN ANALYZE reveals that a sequential scan is used instead of an index scan. This can be due to various reasons, such as low cardinality or outdated statistics. In such cases, updating table statistics with ANALYZE or creating more suitable indexes can help.

Best Practices for Index Management

- Regular Maintenance: Periodically reindex and vacuum your database to maintain index efficiency and reduce bloat. PostgreSQL's VACUUM and REINDEX commands are essential for this purpose. Example:
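A minimal sketch, with orders standing in for one of your own tables:

```sql
-- Remove dead tuples and refresh planner statistics.
VACUUM (ANALYZE) orders;

-- Rebuild all indexes on the table to remove index bloat.
REINDEX TABLE orders;
```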

Regular maintenance ensures that indexes remain in optimal condition, reducing the likelihood of performance degradation.

- Limit Indexes on Heavily Updated Tables: For tables that undergo frequent updates, limit the number of indexes. Each update operation requires corresponding modifications to the associated indexes, increasing write overhead. Evaluate the trade-off between read and write performance for such tables.

- Use Indexes Judiciously for Large Text/BLOB Columns: Be cautious when indexing large text or BLOB (Binary Large Object) columns, as this can significantly increase storage requirements. Consider whether full-text search solutions or specialized indexing techniques are more suitable for your use case.

- Avoid Over-Indexing: Avoid creating too many indexes on a single table. Each index consumes storage space and adds overhead to data modification operations. Evaluate the necessity of each index and prioritize based on query patterns.

- Keep Indexes and Tables in Sync: Ensure that index definitions match the corresponding table structure. Changes to the table, such as column additions or deletions, may require corresponding adjustments to indexes.

- Test Index Impact on Write Operations: Before creating new indexes, especially on tables with high write loads, perform benchmarking to assess the impact on insert, update, and delete operations. Balancing read and write performance is crucial.

- Regularly Review and Refine Indexing Strategy: Database workloads can evolve over time. Regularly review and refine your indexing strategy to adapt to changing query patterns and performance requirements. Tools like database profiling can provide insights into query behavior.

Conclusion

Effective database indexing is a dynamic and iterative process that requires a deep understanding of your application's requirements and query patterns. By following these practical tips and best practices, you can strike the right balance between optimizing read performance and minimizing the overhead associated with indexes. Regularly monitoring and maintaining indexes is equally important to ensure long-term database performance and efficiency.

Remember that while these best practices provide valuable guidelines, they should be tailored to your specific database system and application needs. Always consult the official documentation of your chosen database management system for detailed and up-to-date guidance. Continuously optimizing and fine-tuning your database indexing strategy can lead to significant improvements in overall application performance and user satisfaction.